Memory leaks happen. And if you’re here, reading this, I’d bet you’re dealing with one. First things first - are you sure it’s a leak, and not bloat?

Okay, so it’s a leak. Much has been written about various tools for profiling a leak, understanding heap dumps, common causes of leaks, work being done to improve Ruby’s memory layout, and so much more. Ben Sheldon’s recent “The answer is in your heap: debugging a big memory increase in Ruby on Rails” is a great example. Ben mentions and shows how he and some teammates used several different tools to generate heap dumps, analyze and interrogate them, and ultimately find and fix the source of a leak in Rails itself.

These are all great resources, and can be crucial knowledge in our hunt for a leak.

But they all suppose we already have an idea of where to start looking, or use stripped down examples to showcase the tools.

The memory_profiler Gem can profile memory allocations around some suspect code, if we already know that code is suspect.

Derailed Benchmarks can get us

started on tracking down a leak in the overall stack, or a known problematic resource, and generate heap dumps for us.

Comparing those dumps with heapy can point us in the right direction by revealing memory being unexpectedly retained over time.

We can use sheap to track down exactly where problematic objects allocations are happening, once we’ve identified those problematic objects.

But what if we’ve reviewed all recent code changes, and nothing stands out? Or if the leaks don’t happen consistently, across all instances of the running app? Or memory starts leaking different times? Where do we even start?

That’s what I was asking myself recently with a decade old Rails app I work on. This is a real production app, driving substantial revenue. It serves a sustained load of 400-500 requests/second, with peaks into several thousands/second.

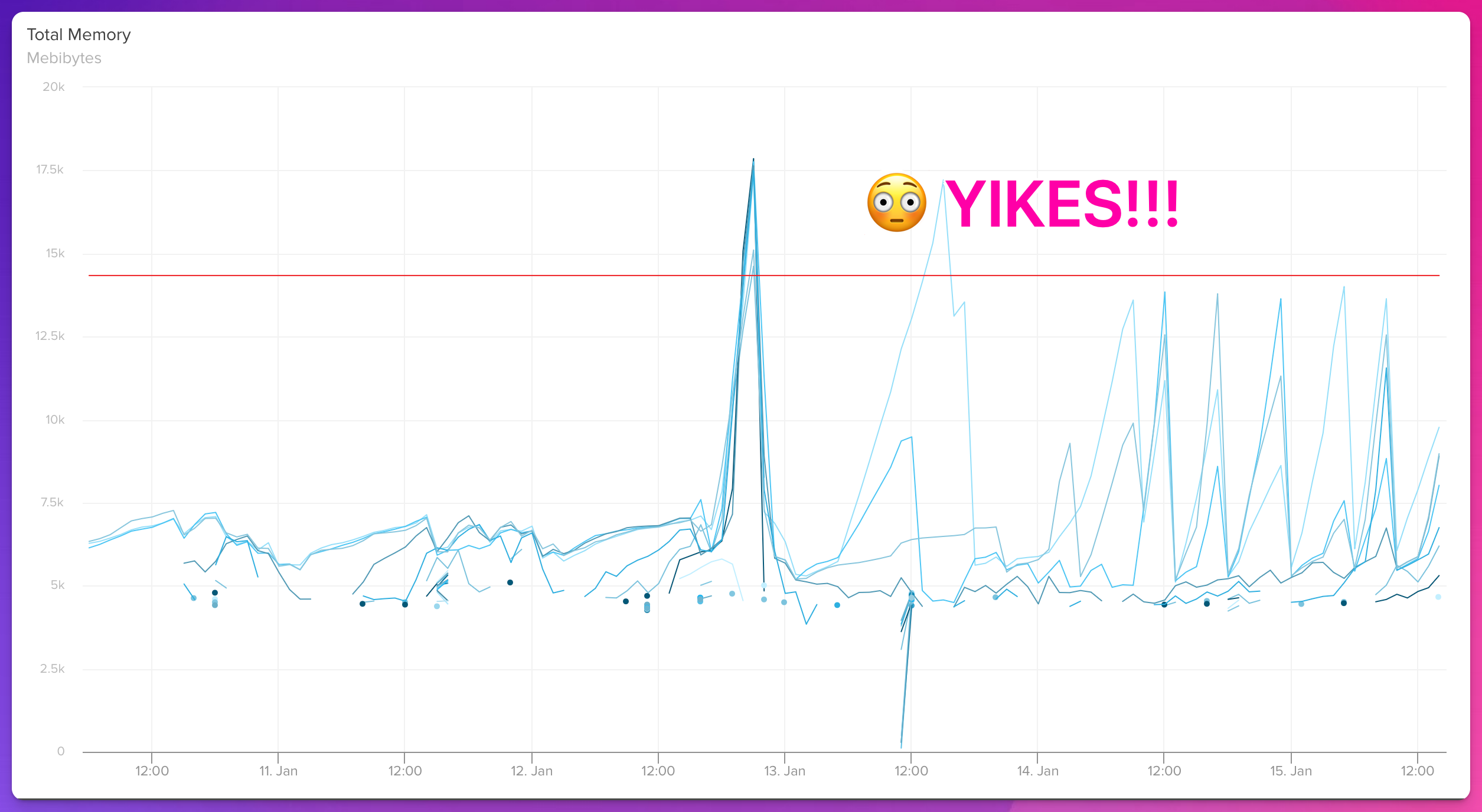

Then one, nondescript day, amongst the usual flow of deploys, memory started spiking. 📈 The pager started yelling. 📟 And we had what looked like a memory leak. 💧

🔥 First put out the fire

It certainly looked like a leak, rather than bloat, meaning we had one sure-fire way to temporarily fix the problem.

Restart things!

We run on Heroku, and what we’re seeing above are per-Dyno memory numbers.

In the short term, we’d either let our normal cadence of many-deploys-a-day restart our web instances, or we’d manually restart individual Dynos approaching their memory limit.

🔎 Look for suspect changes

With the fire under control we turned our attention to changes that happened in the hours before the first big spike. The graph above doesn’t show our deploy markers (they were too distracting at this granularity) but starting with the deploy just inside of the first spike and working backward, we audited all code changes going back three days.

We found a few changes that seemed like they could be related.

One of them introduced a memory leak in development mode due to Rails’ code reloading.

Another caused us to hit Redis more than we intended during certain request filtering.

The third looked like we were making more database calls, loading more ActiveRecord instances than we wanted (a classic N+1).

We fixed the first two and rolled back the third, deploying them one at a time.

The memory leak continued. 📈

No other code changes looked like they could cause a leak. But, we had made a few tooling changes to start collecting Ruby language and Puma pool usage metrics, so we backed those out and deployed. The memory leak continued. 📈

🧩 Look for patterns

Next we went looking for patterns to the memory growth.

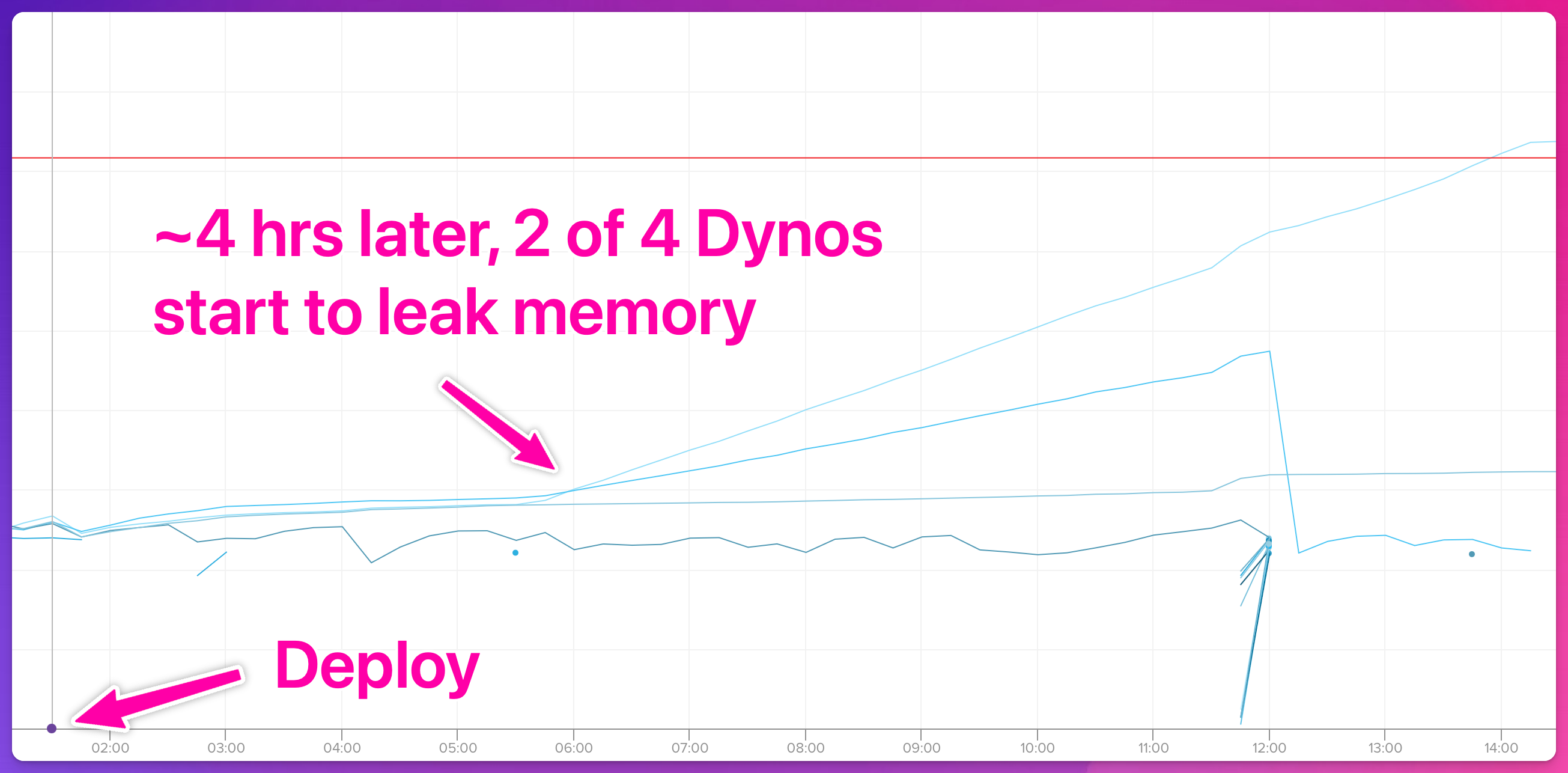

Of note, it was only our web Dynos that were leaking.

All of our Sidekiq and Delayed::Job (yes, both, for… reasons lost to history) Dynos looked just fine.

Also, not all web Dynos were always leaking memory.

Sometimes they’d go hours with relatively flat-ish memory usage consistent with a long-running web process.

Then one, or a few, or all of them would start to leak memory.

This made us suspicious that perhaps the leak was specific to a certain kind of traffic, rather than a problem in the stack, or with volume of traffic.

When we looked at our Puma workers, we also saw that not all workers on a single Dyno were leaking. We run Puma in clustered mode, with 12 worker processes for the 8 vCPU on each Dyno. It wasn’t unusual to see just a couple of the 12 processes were using up nearly all of the memory on the Dyno. i.e., a couple workers were leaking, while the rest were fine.

Due to aggressive sampling we were unable to use our OpenTelmetry Traces to match specific kinds of traffic to specific Dynos at times that correlated to the start of the leaks. But we still had a strong suspicion the leaks had something to do with particular traffic. One of the smart folks I work with had the idea to try correlating via our logs - which are not sampled. But the tooling there didn’t make that particularly easy. So we put that idea in our back pocket and moved on to another.

🚛 Go to the dumps!

If memory is being leaked, looking at dumps of Ruby’s memory heap is probably a good idea. After all, all of those very smart Internet Folks wrote lots of words about it.

Step 0: Get the tooling in place

We use rbtrace to attach to a running Ruby process, meaning it needs to be loaded into the running process.

Basically, make sure it’s in the Gemfile.

It’s generally fine to have this loaded in production.

But we’ve made it somewhat toggle-able; we can opt in/out of loading rbtrace via an Environment Variable:

gem "rbtrace", require: String(ENV.fetch("FEATURE_ENABLE_MEMORY_DUMPS", false)) == "true"

Step 1: Find a leaking process

Running on Heroku meant we needed to dump the heap of a live, running web process, from within a Dyno itself.

Luckily, Herkou and the Ruby community have already built all of the tooling necessary.

Using heroku ps:exec to open an SSH tunnel into a leaking Dyno, we needed to find a particular Puma worker process to dump.

We went to our old *nix friend ps.

ps -eo pid,ppid,comm,rss,vsz --sort -rss | grep ruby

This gave us a list of all of the Ruby processes running on the Dyno, sorted by their RSS (amount of memory being used by the process).

In the case of a web Dyno, most of them have the same PPID.

These are the Puma workers.

The process with a matching PID is the Puma primary (master) process.

It will look something similar for Sidekiq.

Other Ruby tools that run only a single process would look a little different.

Picking the process with the most memory (i.e., the leakiest of them) we use its PID to and rbtrace to start tracing memory allocations.

Step 2: Enable memory allocation tracing

We set that worker process ID as $DUMP_PID to be used as a variable in later steps.

This helps prevent silly typos and much face-into-keyboard-ing later on. 🤦

DUMP_PID=<pid>

# Turn on allocation tracking in the Ruby process.

# This will impact performance; it can use a lot of memory and CPU

rbtrace --pid="${DUMP_PID}" --eval="Thread.new{require 'objspace';ObjectSpace.trace_object_allocations_start}.join"

Step 3: Dump the heap

# Generate the first heap dump

rbtrace --pid="${DUMP_PID}" --eval="Thread.new{require 'objspace'; GC.start(); io=File.open('/tmp/heap-${DUMP_PID}.json', 'w'); ObjectSpace.dump_all(output: io); io.close}.join" --timeout=600

The .json file can be quite large.

On a leaking process that had been running for a few hours, it was 5-6GiB in size.

I’d recommend gzip-ing it.

gzip "/tmp/heap-${DUMP_PID}.json"

That will replace the .json dump file with a .json.gz file which could be up to an order of magnitude smaller.

Step 4: Fetch the dump

This will differ depending on where the application is deployed, but on Heroku we run the following (adjusting for exact file and Dyno names) from a shell on our local machine.

heroku ps:copy /tmp/heap-<pid>.json.gz --dyno=<web.1> --app=<app name>

Decompress and move the file somewhere organized.

I’d recommend renaming it based on which dump this is; we’ll grab at least three if we’re going to use heapy to try to find retained memory, for example.

mv heap-<pid>.json.gz ~/dumps/.

gzip --keep --decompress ~/dumps/heap-<pid>.json.gz

Repeat steps 3 and 4 to grab as many dumps as needed.

Step 5: Clean up after ourselves

Be sure to turn off the allocation tracking, and probably remove those dumps from the Dyno. Or just restart the Dyno.

rbtrace --pid="${DUMP_PID}" --eval="Thread.new{GC.start;require 'objspace';ObjectSpace.trace_object_allocations_stop;ObjectSpace.trace_object_allocations_clear}.join"

🧐 Analyze the heap dumps

We tried looking at the retained memory reports via heapy, and meandering the diffs via sheap, but with no particular spot to start looking, it felt like looking for a specific needle in a stack of needles.

(Thanks for that line, Tom Hanks.)

In hopes of seeing something that looked off, I went in search of a way to visualize the heap dumps.

And The Internet™ did not disappoint!

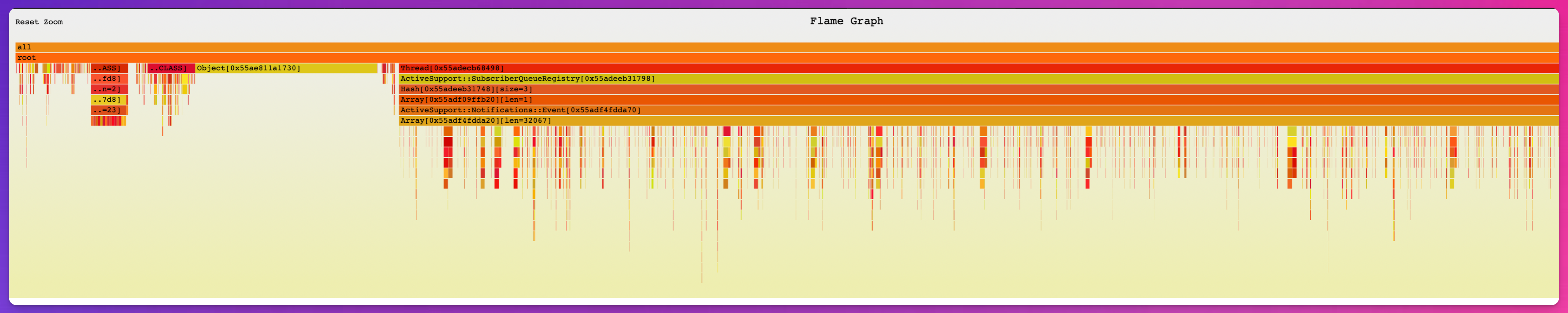

reap is a tool for analyzing a Ruby heap dump’s reference graph, and generating visualizations.

Including flame graphs!

Here’s a graphs of the third dump we took off of a leaking process, per the steps above.

reap path/to/heap-<pid>-2.json --count=15 --flamegraph=dump-2.svg

The way to read this is starting at the top and moving downward are references from a “root” (in the view of the Ruby Garbage Collector) to other objects. That is, objects further down are references by objects above them. And the more memory an object (or an object it references) is holding onto, the wider the cell in the graph.

Notice anything odd?

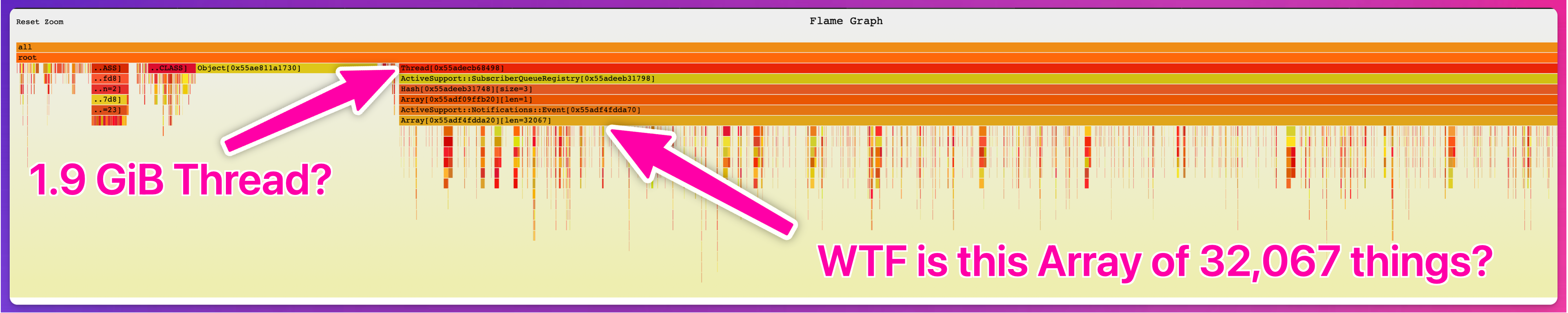

What’s up with that Thread holding 1.9GiB of memory?

Or really, it’s the Array at the bottom, holding onto references to 32,067 other objects, in turn holding 1.9GiB of memory.

So what the heck is that array?

Where did it come from?

Why is it referencing so much memory?

🧗 Go spelunking with sheap

Using the latest main branch from sheap (because some of the features like find_path weren’t yet released to RubyGems), we compared the second and third dumps.

It will take a while to parse these dumps, which were pushing 6GiB in our case. ☕️

~/code/jhawthorn/sheap/bin/sheap heap-<pid>-1.json heap-<pid>-2.json

That opens an IRB console where we can explore the dumps and the diff between them.

See the README (and the source code) for all that it can do.

irb: t = $after.at("0x55adecb68498")

===> <DATA 0x55adecb68498 VM/thread (137 refs)>

irb: $after.find_path(t)

===> [<ROOT vm (4014 refs)>, <DATA 0x55addb8deff8 ractor (31 refs)>, <DATA 0x55adecb68498 VM/thread (137 refs)>]

We first investigated that Thread to understand where it was coming from.

Our suspicion was that it was a background thread from one of our telemetry or metrics tools - maybe the OTel tracer, or error reporter, or something like that?

But, sheap told us it was a Puma thread - the one actually working requests, meaning it’s “the Rails thread.”

So that question is answered.

Next we wanted to know more about that ActiveSupport::SubscriberQueueRegistry object.

We went looking at the stable branch for the version of Rails we’re currently on (6.1) and found that it’s basically a per-Thread Hash used to store lists of ActiveSupport::Subscriber instances, based on the event name.

And ActiveSupport::Notifications::Event objects are pushed onto and popped off that per-thread, per-event-name list (Array) as ActiveSupport::Notifications.instrument blocks are run.

Going back to sheap we can see that in action - the SubscriberQueueRegistry instance holds a reference to a Hash, which has references to some String and Array objects.

irb: asr = $after.at("0x55adeeb31798")

===> <OBJECT 0x55adeeb31798 ActiveSupport::SubscriberQueueRegistry (1 refs)>

irb: asr.references

===> [<HASH 0x55adeeb31748 (6 refs)>]

irb: asr.references.first.references

===>

[<STRING 0x55adeb01afb0 "ActiveRecord::LogSubscriber-9300">,

<ARRAY 0x55adeeb314f0>,

<STRING 0x55adf09ffaf8 "Lograge::LogSubscribers::ActionController-276520">,

<ARRAY 0x55adf09ffb20 (1 refs)>,

<STRING 0x55adf4a029e8 "Lograge::LogSubscribers::GRPCWeb-276540">,

<ARRAY 0x55adf4a02a10>]

The only Array with anything in it was in the middle of the flame graph with addresses ending ffb20.

It held a reference to a single ActiveSupport::Notifications::Event instance.

And that instance then held a reference to the Array of 32k+ other objects.

If we look at the code for that Event class, we see it contains a single @children ivar Array.

So, it’s an Array of what then?

Child Event instances, presumably.

We grabbed a couple to confirm.

irb: event = asr.references.first.references[3].references.first

===> <OBJECT 0x55adf4fdda70 ActiveSupport::Notifications::Event (4 refs)>

irb: children_ary = event.references.last

===> <ARRAY 0x55adf4fdda20 (32067 refs)>

irb: children_ary.references.first

===> <OBJECT 0x55adf523e9e0 ActiveSupport::Notifications::Event (4 refs)>

irb: children_ary.references.first.references

===> [<STRING 0x55addbe19ae0 "redirect_to.action_controller">, <HASH 0x55adf523e9b8 (10 refs)>, <STRING 0x55adedec2188 "19b24a9862f0e692859e">, <ARRAY 0x55adf523e990>]

Sure enough, the huge Array is a list of “children” Event objects.

This first child Event was for a redirect_to.action_controller event name.

And it would have been pushed onto this Event#children list because some other Event was already on the event_stack.

At this point our intuition was telling us something was wrong here - these Events were incorrectly being put on this #children Array.

We wanted to know what else that child Event had to tell us, like what is in that Hash it’s holding a reference to?

irb: first_child = children_ary.references.first

===> <OBJECT 0x55adf523e9e0 ActiveSupport::Notifications::Event (4 refs)>

irb: first_child.references[1].references

===>

[<OBJECT 0x55adf4e54e38 ActionDispatch::Request (10 refs)>,

<STRING 0x55ade7965bf0 "PresentationController">,

<STRING 0x55adf4250150 "view">,

<HASH 0x55adf4f6d4f0 (507 refs)>,

<OBJECT 0x55adf4f5c998 ActionDispatch::Http::Headers (1 refs)>,

<STRING 0x55adf4cccca0 "GET">,

<STRING 0x55adf4f6e8a0 nil (1 refs)>,

<HASH 0x55adf5378c70 (1 refs)>,

<ARRAY 0x55adf5378ae0 (2 refs)>,

<OBJECT 0x55adf5382310 (0x55adf5400058) (4 refs)>]

Whoa, an entire ActionDispatch::Request object. 😱

The request that was being redirected.

What did that look like?

Maybe we could reproduce this locally?

irb: first_child.references[1].references.first

===> <OBJECT 0x55adf4e54e38 ActionDispatch::Request (10 refs)>

irb: first_child.references[1].references.first.references

===>

[<HASH 0x55adf4ccced0 (222 refs)>,

<HASH 0x55adf4f6d4f0 (507 refs)>,

<STRING 0x55adf4f6e8f0 "/view/abc123def456?parked=junk&parking=junk&parseSchema=junk&… eliding more param tampering … &password=[FILTERED]& … &portbl=junk">,

<STRING 0x55addc723e60 "https://">,

<STRING 0x55adf4cccca0 "GET">,

… # eliding more stuff

<OBJECT 0x55adf4f5c998 ActionDispatch::Http::Headers (1 refs)>]

Well look at that.

It‘s a mostly valid request, to an actual Route in the app, with a valid public ID of a resource (changed to protect the innocent, of course).

What’s not legit is the parameter tampering - the work of a scanner very likely.

What especially stood out was the password=[FILTERED] , which means something was catching the request and cleaning sensitive info we’d not want in logs and such.

🔄 Reproduce it

With the questionable looking query path and params, we opened the production app in an incognito browser window and made a request. We were met with a 500 server error.

🎉 Success! ✨

Logs confirmed that it was an error.

Specifically a URI::InvalidURIError.

The logs also recorded which Dyno the request had hit.

We popped open our memory graphs and by sheer luck, that Dyno was currently showing normal memory usage.

We put a temporary deploy freeze in place so we could monitor memory usage for a few minutes and before long the trend line was clear.

We had a leak.

With some confidence we were leaking Event objects as part of the ActiveSupport::Notifications tooling, we still wanted to reproduce it locally so we could step through the code and see what was going on.

Leaning on a little bit of binding.pry and even more puts debugging in the activesupport Gem (if you don’t know, bundle open <gem name>, check it out), we eventually reproduced the situation locally.

And with a backtrace!

The error’s backtrace pointed to the uri Gem (part of Ruby’s standard library), which was being used by Bugsnag’s Bugsnag.cleaner.clean_url method, as part of a ActiveSupport::Notifications.subscribe block.

Which sounded awfully familiar.

A little more debugging and stepping through the Bugsnag and ActiveSupport code and We’d narrowed in on the problem(s).

💡Understand the cause

There were two related problems, right next to each other.

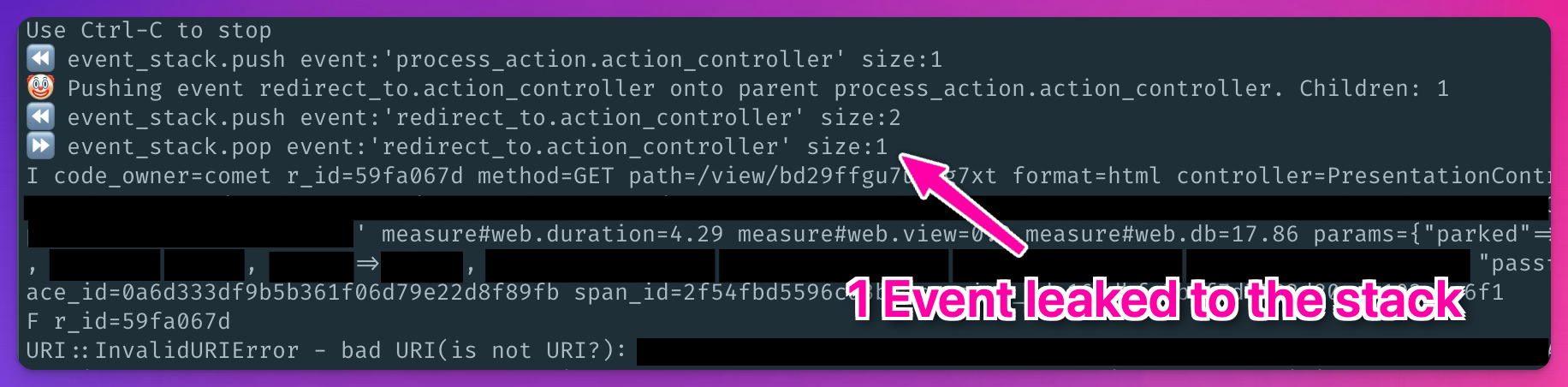

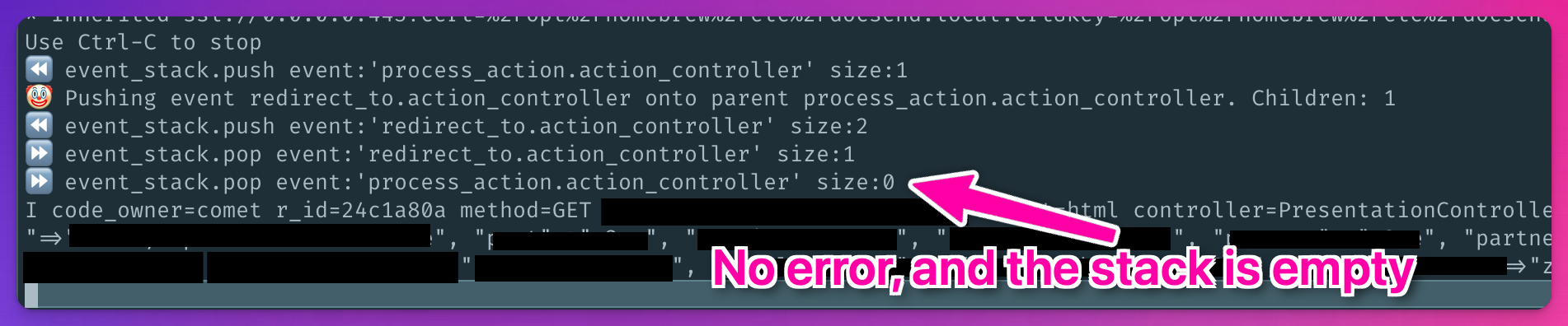

The first was to do with ActiveSupport::Subscriber’s notion of #children and how it was tracking them.

John Hawthorn explains it, and had already fixed it in Rails 7.1 (we were on 6.1 at the time).

This alone wasn’t the cause our memory leak.

But in combination with a change to the Bugsnag Gem to start cleaning URLs Rails breadcrumbs, it manifested in a leak.

The Bugsnag change relied on URI to parse and then redact certain sensitive query strings.

The URI is rightfully pretty strict about what it considers a valid URI, raising an error when given an invalid URI.

Meaning the ActiveSupport::Notifications.subscribe block in the Bugsnag Gem could now raise an error while processing an ActiveSupport::Notifications::Event.

And raising that error meant that the parent Event wasn’t getting popped off the Subscriber#event_stack.

So the parent Event was sticking around (leaking memory), and it was still holding a reference to the child Event via its #children Array (leaking more memory).

John’s change not only removed the Event#children concept, but completely removed the shared-Array used to track Events.

The source of both leaks, gone in a single PR. 👏

🛠️ Fix the problem

John’s fix for newer Rails means this is no longer a problem… on newer Rails versions.

But we weren’t there yet.

As luck would have it, just days prior Bugsnag had fixed the Bugsnag.cleaner.clean_url method to not raise an error on invalid URIs.

Our fix, in the short term, was to upgrade the Bugsnag Gem to a version that included the “don’t raise an error while trying to clean an invalid URI” change. Longer term we’re upgrading Rails versions.

🤡 It’s me, hi, I’m the problem, it’s me.

(with apologies to Taylor Swift) 🙏

Funny story.

It turns out that Bugsnag change was introduced to our code base right around when the first memory spikes occurred.

We’d upgraded from v6.26.0 to v6.26.1 to fix a deprecation warning for a different dependency.

We (by which I mean me, I, myself) audited this change too.

And dismissed it as not being related because… why the heck would redacting part of a URI, something the Bugsnag Gem already did in other places, cause such a bad and sudden memory leak!?!?

Well… something-something-something assumptions. 🤦